What Most Miss When Evaluating Call Center Quality Assurance Software

A lot of contact center leaders still treat quality assurance as a box to tick. They invest in leading call center quality assurance software, sit through vendor demos, check off basic features like recording and AI scoring, and then wonder why their CSAT barely moves. Even though 95% of companies say measuring call center metrics is important for improving customer satisfaction, the typical QA approach focuses on monitoring compliance rather than driving the behavior changes that improve the customer experience.

Call center quality assurance software can and should be your lever for better CX, lower costs, and compliant operations. Yet even at specialized call center outsourcing companies, most teams choose tools from surface-level checklists and overlook the factors that actually determine whether their QA program will produce results or become another underused system collecting digital dust.

What Do Most Teams Look At First When Evaluating QA Software (And Why It's Not Enough)?

In most QA software evaluation meetings, teams focus on features such as call recording, automatic transcription, customizable scorecards, visual dashboards, and some form of AI scoring.

These are table stakes now. Almost every vendor offers them, so features alone can't separate one tool from another.

The problem isn't that these capabilities don't matter. Recording and transcription let you capture what happened. Scorecards give you a framework to judge interactions. Dashboards surface high-level trends. But these features won't tell you:

- why your first contact resolution rate stalled at 68%

- which specific process gaps are frustrating customers

- or how to structure coaching that actually changes agent behavior.

The difference between a call center quality assurance platform that transforms performance and one that produces reports no one reads comes down to factors that rarely make it onto evaluation spreadsheets.

The Problem With a Narrow Focus on Price and Licenses

Budget conversations dominate software decisions, and call center quality assurance software tools are no exception. Teams scrutinize per-seat licensing costs, compare quotes, and gravitate toward whatever appears cheaper on paper. In the process, they overlook the operational realities of implementation (complex rollouts, steep training requirements, and ongoing administrative effort), which frequently prevent these “affordable” tools from ever being fully adopted.

The consequences show up quickly in how QA programs operate. Typically, QA covers only 1-5% of total interactions, leaving massive blind spots in agent performance and customer experience. So the real cost isn't the license fee – it's the opportunity cost of running a QA program that doesn't scale, doesn't integrate with your stack, and doesn't give supervisors the insights they need to facilitate good customer service.

The Blind Spots That Undermine QA Software Decisions

Beyond the standard feature checklist and budget conversations, a few other critical blind spots sabotage QA effectiveness. These gaps explain why so many contact centers struggle to turn their QA data into measurable improvements in customer experience and operational efficiency.

Blind Spot 1: Treating QA as Compliance Only

Many organizations optimize their QA process exclusively for checking scripts, verifying disclosures, and confirming regulatory adherence. That isn't wrong in itself, but a compliance-first mindset leads to scorecards that don't really measure performance. They show whether agents said the right words but miss whether they actually solved the customer's problem. And, believe it or not, agents learn to game these scorecards by hitting the talk-track checkboxes while providing mediocre service.

Call centers that organize feedback within clear frameworks can see a 25–30% boost in agent productivity. But that improvement only comes when QA evaluates what actually drives customer satisfaction metrics:

- empathy

- problem-solving effectiveness

- effort reduction

- and full case resolution.

When QA focuses solely on scripts, teams miss the root causes of poor customer service that damage relationships and drive churn.

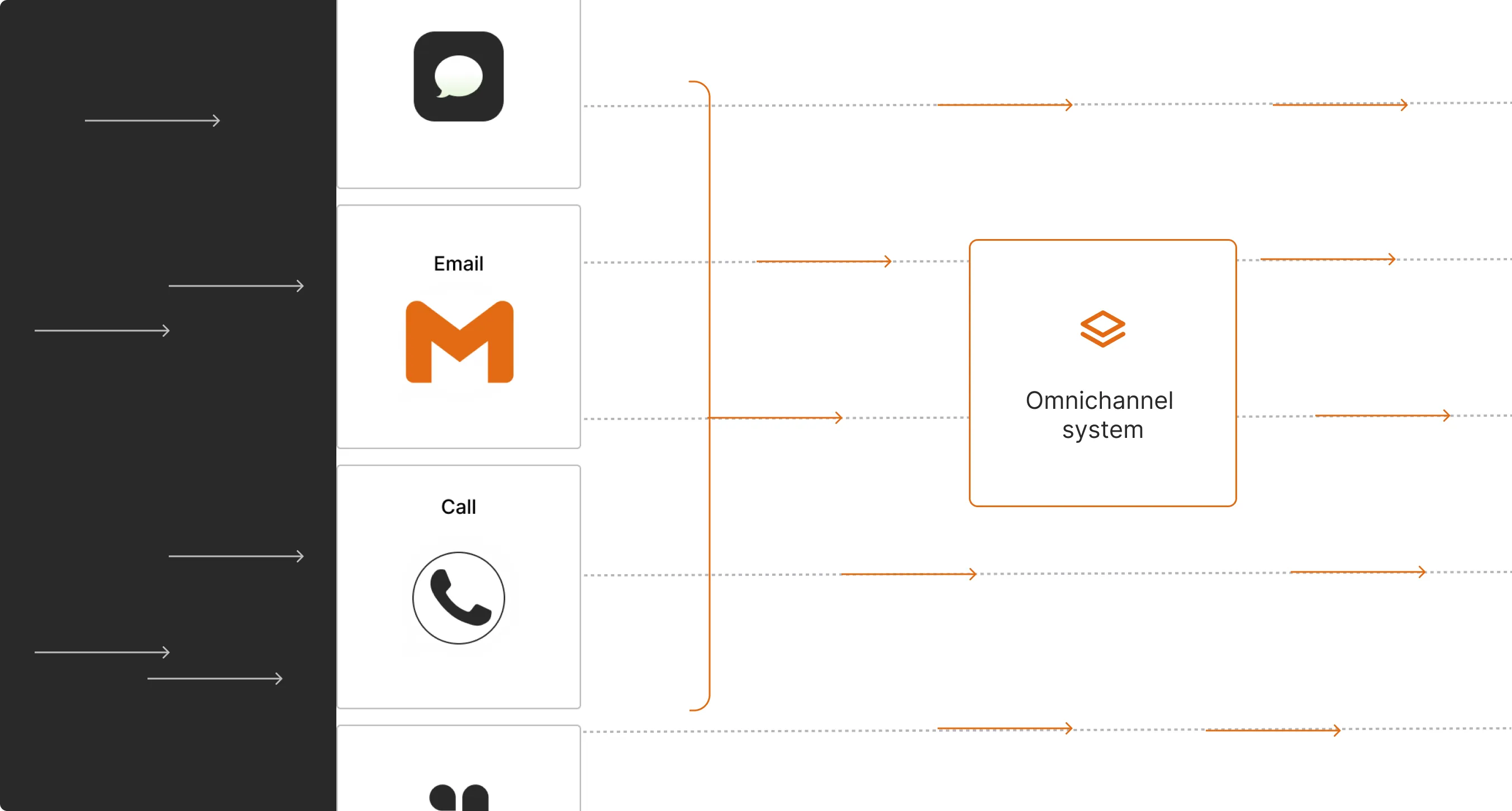

Blind Spot 2: Ignoring Data Foundations and Integrations

Hopefully not in your company, but QA tools often become isolated silos, disconnected from the major touchpoints that hold crucial context:

- Telephony system

- Customer support channels

- CRM

- Help desk

- And workforce management platforms.

Without integration, your AI scoring engine won't see that the "difficult" customer on the call had three missed calls yesterday, or that the agent resolved a complex issue that normally requires escalation. Similarly, QA systems disconnected from your customer support knowledge base can't identify when agents provide outdated information or miss opportunities to reference helpful resources.

When data is fragmented, it’s easy to misinterpret agent performance. An agent who spends eight minutes on a call might look inefficient in isolation but exceptional when you know they prevented a cancellation and identified an upsell opportunity. Strong integration capabilities – deep API access, native connections to major platforms, flexible data export options – should be core evaluation criteria, not afterthoughts buried in technical appendices.

Blind Spot 3: Underestimating Workflow and Coaching Fit

Most buyers evaluate QA software through an administrator’s lens during vendor demos. They’re shown polished interfaces and sleek dashboards, but rarely test what matters day to day: whether supervisors can quickly spot coachable moments or whether agents can easily access their own performance data without clicking through multiple screens.

Clunky evaluation workflows kill adoption faster than missing features. If reviewing a call takes supervisors 15 minutes instead of 3, they'll review fewer calls. If agents can't easily understand why they scored poorly on empathy, coaching sessions turn into defensive arguments over a bad score instead of development conversations. Call center managers believe improving job satisfaction can improve customer satisfaction scores by 62% and boost efficiency by 56%, but those improvements require coaching workflows that supervisors actually use and agents actually benefit from.

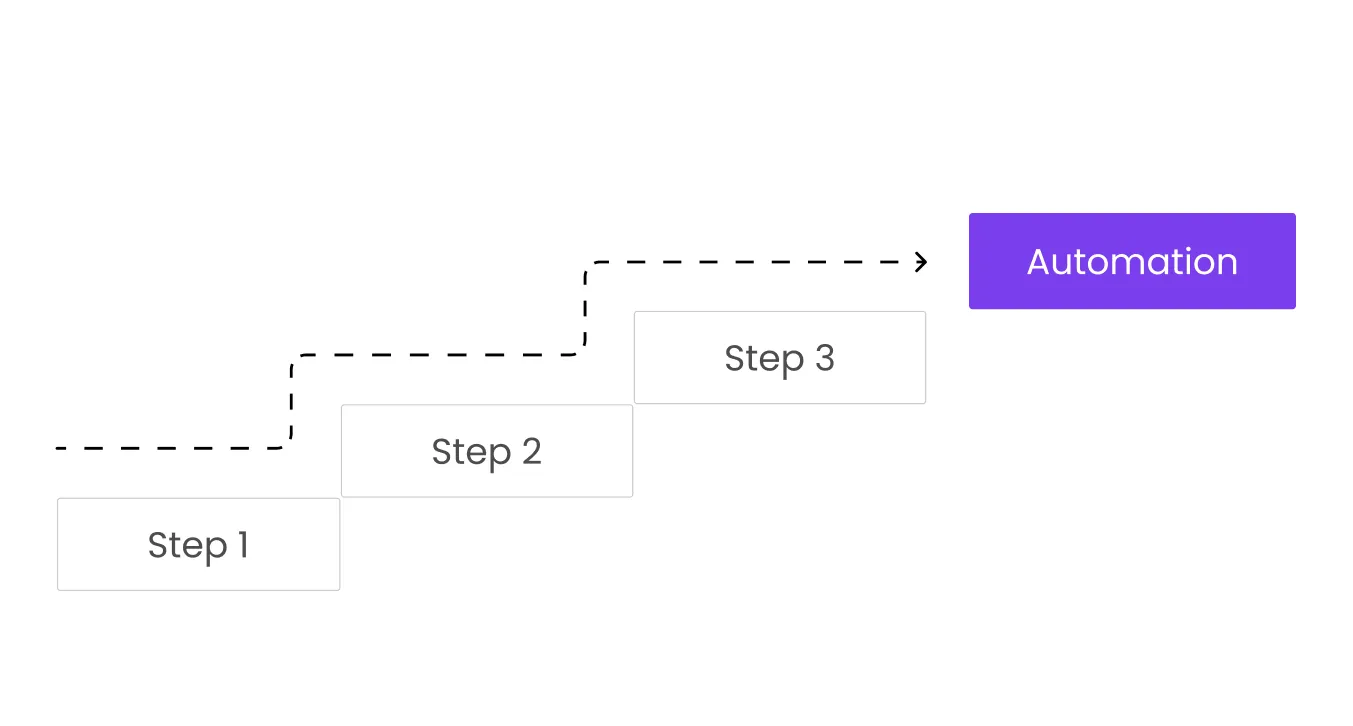

Blind Spot 4: Over-trusting "100% Automated QA"

Vendors love promoting fully automated scoring as the solution to manual QA's limitations. And yes, AI can analyze every interaction instead of sampling 2%, which creates valuable visibility. But over-reliance on automated scoring creates new problems: superficial metrics that miss context, false confidence in numbers that lack nuance, and the dangerous assumption that high automation scores equal good service.

While AI-powered QA can evaluate nearly 100% of interactions, it still struggles with subjective elements, such as whether a tone suits a specific situation or when to bend a policy to save a customer relationship.

The most effective approach combines AI pattern detection with human review, with automation handling roughly 60–80% of your support volume for optimal results. This way, you still use "full" automation to surface outliers and coaching opportunities, while leaving interpretation and final judgment to humans. Even with this hybrid approach, plan your AI automation carefully: the more you delegate to AI, the tighter the integration you need.

The Evaluation Criteria That Actually Matter

That calls for a different way of evaluating QA software. Below are four criteria that help separate tools that drive performance improvement from tools that simply monitor it.

Criterion 1: How Well It Measures What "Good" Looks Like for You

Generic scorecards and default templates won't move the needle on your specific customer service standards. The best call center quality assurance software supports your unique definitions of quality through:

- flexible scoring frameworks

- multichannel evaluation forms

- and calibration workflows that maintain consistency across reviewers.

These features allow you to build scorecards that account for customer-centric outcomes. Consider the following:

- Can you score for first contact resolution effectiveness?

- Can you measure empathy in ways that align with your brand voice?

- Can you differentiate evaluation criteria for inbound calls vs outbound calls or adjust scoring based on interaction complexity?

During vendor evaluations, bring your actual scorecards and use cases. Ask software providers to demonstrate how their platform would handle your specific scenarios, not their sanitized examples. The difference between what vendors show in demos and what you'll experience in production often determines implementation success.

Criterion 2: Depth of Analytics and Insight, Not Just Dashboards

Simple averages and QA pass rates tell you almost nothing useful. Nowadays, you need interaction analytics that identify root causes, detect emerging trends, and connect QA scores to business outcomes.

So ask yourself:

- Can the system perform sentiment analysis across customer interactions?

- Does it cluster topics to reveal what's driving dissatisfaction?

- Can it show you which process gaps or product issues create the most friction?

With truly advanced customer service data analytics, you should be able to answer a question like "What's causing the spike in billing complaints?" in minutes, not days. You should be able to drill down from a trend to specific conversations to the exact moment in a call where things went wrong. That analytical depth turns QA from backward-looking reporting into forward-looking, proactive customer service.

Test how quickly you can move from identifying a pattern to understanding its cause. If that process requires exporting data to spreadsheets and manual analysis, the tool isn't sophisticated enough for modern business needs.

Criterion 3: Coaching, Feedback, and Behavior Change

Quality assurance only matters when it changes frontline behavior through targeted, timely coaching. Your QA software should include:

- built-in coaching workflows

- shared evaluations with contextual comments

- follow-up task management

- and progress tracking over time.

The best implementations create feedback loops where agents see their scores immediately after interactions, understand what drove those scores, and receive specific coaching tied to real examples. This personalized approach to agent development accelerates skill building and helps maintain motivation. Strong QA programs also use customer feedback systems to close the loop between quality evaluations and actual customer sentiment.

Next, look for platforms that link QA findings directly to learning management systems or knowledge base articles:

- When an agent scores low on product knowledge, can the system automatically recommend specific training modules?

- When patterns of declining performance emerge across a team, can supervisors launch targeted coaching campaigns instead of scheduling individual meetings?

These systematic feedback mechanisms separate QA tools that drive improvement from those that simply document problems.

Criterion 4: Fit With Existing Stack and Future Roadmap

Integration with your telephony platform, CCaaS, CRM, help desk, workforce management, and business intelligence tools is a prerequisite for scalable QA. You need:

- open APIs

- single sign-on capabilities

- role-based access control

- and the ability to export quality data to your data warehouse for long-term analytics.

Also consider how the vendor's product roadmap aligns with your plans for AI assistants, omnichannel customer service, or evolving compliance needs, and how the platform will work alongside your other digital customer service tools as part of a unified technology ecosystem. A vendor releasing major updates every quarter shows it is actively investing in the platform. One whose roadmap hasn't changed since last year is likely maintaining, not innovating.

Current Market: Call Center Quality Assurance Software Comparison

The market is saturated with QA solutions, but only a few will deliver the kind of quality assurance you need. Here's a breakdown of leading call center quality assurance software platforms worth evaluating.

Call Center Quality Assurance Software Features & Recommendations

These platforms are the current market leaders. However, the right choice for your business still depends heavily on your specific requirements, existing technology stack, and organizational priorities.

AI-Powered Call Center Quality Assurance Software Worth Noting

Several specialized AI-native platforms also deserve attention from teams looking to automate their quality assurance processes:

- MiaRec: Generative AI-powered automated QA that understands conversation context rather than matching keywords. The solution covers 100% of interactions with minimal setup, which makes it accessible for mid-sized teams that want AI benefits without a massive implementation project. It can be particularly useful for organizations that need to scale QA quickly.

- CallMiner: Time-tested speech analytics with strong topic clustering and sentiment analysis. The platform excels at identifying patterns even at high interaction volumes, which makes it valuable for large customer support teams analyzing thousands of conversations a day.

- Scorebuddy: AI-powered auto-scoring combined with strong reporting and calibration features. Its GenAI Auto Scoring can scale to 100% coverage while offering user-friendly interfaces that reduce training time. It's an excellent fit for organizations ready to move from manual QA to automated systems.

Choosing QA Software That Actually Improves CX

The biggest mistake when evaluating call center quality assurance software isn't choosing the wrong features. It's treating the decision as the purchase of a simple monitoring tool instead of the selection of a change engine for customer experience and performance. Software alone won't fix your QA program. But the right platform makes effective QA scalable, sustainable, and directly tied to business outcomes.

When you make the final decision on which call center quality assurance software to use, structure your evaluation around the measurable improvements you expect over the first 6–12 months:

- specific increases in customer loyalty

- reductions in repeat contacts

- improvements in compliance adherence

- or gains in agent retention.

If you're looking to establish solid QA processes but need expert guidance on implementation, we are here to help! Our team specializes in establishing quality control frameworks for multilingual customer support operations across all major support channels. Book a call with us to discuss how we can help you build a QA program that drives real improvements, not just generates reports.

Quality assurance done right can turn any customer interaction into a sales opportunity. The software you choose determines whether that potential becomes reality.
